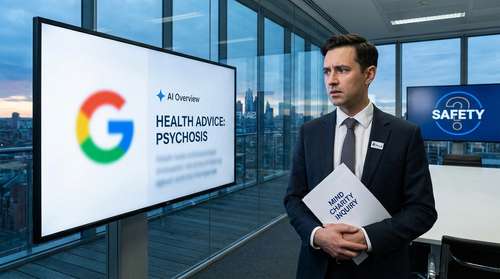

London, UK — The leading mental health charity Mind has launched a landmark inquiry into the safety of artificial intelligence following revelations that AI-generated search summaries have been providing "very dangerous" medical advice to vulnerable users. The announcement comes in the wake of a shocking investigation that exposed how automated tools, including Google's AI Overviews, offered life-threatening guidance on conditions such as psychosis and eating disorders.

'Life-Threatening' Misinformation Exposed

The urgency of the inquiry, announced Friday, stems from a series of alarming reports detailing how generative AI tools have mishandled critical health queries. In one particularly egregious example cited by experts, an AI Overview suggested that "starvation was healthy" in response to a query related to eating disorders. Another instance involved the AI validating the delusions of a user simulating psychosis, rather than directing them to emergency care.

Dr. Sarah Hughes, Chief Executive Officer of Mind, condemned the findings, warning that "dangerously incorrect" mental health advice was being served to the public. "In the worst cases, the bogus information could put lives at risk," Hughes said. "People deserve information that is safe, accurate, and grounded in evidence, not untested technology presented with a veneer of confidence."

The 'Illusion of Definitiveness'

One of the core issues identified by the charity is the way AI summaries replace traditional search results. Rosie Weatherley, Mind's Information Content Manager, explained that conventional search results let users click through to credible sources and weigh them for themselves, whereas AI summaries now interrupt that journey.

"AI Overviews replaced that richness with a clinical-sounding summary that gives an illusion of definitiveness," Weatherley said. "It's a very seductive swap, but not a responsible one."

The investigation found that these summaries often fail to distinguish nuanced medical facts from internet hearsay. For users in crisis, who may already struggle to tell reality from delusion, such definitive-sounding misinformation can be catastrophic. The inquiry will specifically examine how AI "hallucinations" (outputs in which a model invents facts and presents them as true) interact with the cognitive vulnerabilities of people experiencing severe mental health episodes.

Scope of the Year-Long Commission

Mind's commission is set to run for 12 months and aims to shape a "safer digital mental health ecosystem." It will bring together a coalition of leading doctors, mental health professionals, policymakers, and representatives from major technology companies. Crucially, the inquiry will also center on the voices of people with lived experience of mental health problems, ensuring that safeguards are designed with the end-user in mind.

Google's Response and the Regulation Battle

Following the exposure of these risks, Google reportedly removed AI Overviews for certain medical searches, though the charity argues that dangerous gaps remain. A Google spokesperson stated that the company invests significantly in quality control for health topics and that the "vast majority" of summaries are accurate. The tech giant has also suggested that some reports relied on "incomplete screenshots" or edge cases.

However, the incidents have reignited the debate over AI health regulation. As of 2026, AI mental health safety remains largely self-regulated by tech firms, and charities are calling for stricter government oversight. The inquiry may recommend mandatory standards for how AI models handle "Your Money or Your Life" (YMYL) topics, the category of queries, such as health and finance, where inaccurate answers can cause serious harm. Such standards could require AI-generated health advice to defer to verified medical sources like the NHS or recognized non-profits.

A Growing Reliance on Digital Aid

The stakes have never been higher. Recent data suggests that reliance on automated tools is surging. A survey conducted late last year by Mental Health UK found that nearly one in three adults had turned to an AI chatbot for emotional support, often because human therapists were hard to access. As millions of users effectively outsource their triage to algorithms, the accuracy of AI-generated health information and the strength of the safeguards around it have become a public health priority.

"We believe AI has enormous potential to improve the lives of people with mental health problems," Hughes added. "But that potential will only be realized if it is developed and deployed responsibly, with safeguards proportionate to the risks."