In a packed Los Angeles courtroom this week, Meta CEO Mark Zuckerberg faced a grilling that could reshape the internet for decades. Testifying in a historic social media mental health lawsuit, Zuckerberg defended his company against allegations that Instagram and Facebook are deliberately engineered to exploit the developing brains of children. The trial, which centers on claims from a 20-year-old plaintiff identified only as "KGM," marks the first time the tech mogul has answered directly to a jury regarding the youth mental health crisis.

Inside the Courtroom: Zuckerberg Denies "Addiction" Claims

Taking the stand on Wednesday, February 18, Zuckerberg remained steadfast in his refusal to accept the premise that his platforms are inherently addictive. Under intense questioning from plaintiff attorneys, he argued that while some young people may experience "problematic use," this does not constitute clinical addiction. When pressed on whether features like infinite scroll and variable reward schedules—often compared to slot machines—were designed to hook users, Zuckerberg reportedly pushed back, stating, "I don't think that applies here."

The defense's strategy hinges on distinguishing between deep engagement and harmful dependency. Zuckerberg and Instagram chief Adam Mosseri, who testified days earlier, have maintained that the science directly linking social media use to mental health disorders such as depression and anxiety is inconclusive. "I think a reasonable company should try to help the people that use its services," Zuckerberg told the court, even as he insisted that parental controls and existing safety tools are sufficient.

The "Design Defect" Argument: A New Legal Battleground

This 2026 trial differs from previous congressional hearings because of the legal theory at play. Instead of focusing on the content posted by users, which is largely protected under Section 230 of the Communications Decency Act, plaintiffs are attacking the design of the platforms themselves. Lawyers for KGM and the consolidated school districts argue that algorithms specifically programmed to maximize screen time constitute a product defect.

Key allegations include:

- Intermittent Variable Rewards: The dopamine-driven feedback loops of likes and notifications that keep users checking their phones.

- Infinite Scroll: The removal of stopping cues that allows users to consume content mindlessly for hours.

- Lack of Age Verification Enforcement: Internal documents cited during the trial suggested Meta knew millions of under-13 users were on Instagram but failed to purge them effectively.

The "KGM" Case and School District Lawsuits

The current bellwether trial focuses on KGM, who alleges that her addiction to Instagram and YouTube began at age nine and led to severe depression, eating disorders, and suicidal ideation. Her attorneys argue that Meta prioritized growth over safety, ignoring internal red flags about Instagram's effects on children. This narrative is echoed by hundreds of school districts also suing the tech giants, which claim they have been left to clean up after a youth mental health epidemic that has drained educational resources.

Settlements and the Future of Social Media Regulation

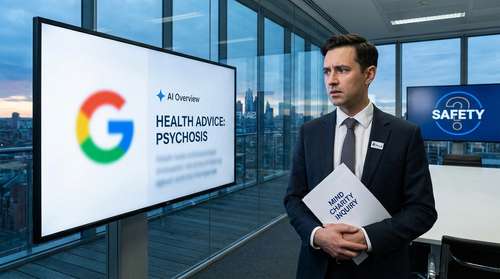

The landscape of the trial shifted dramatically just before opening statements. TikTok and Snap Inc. (parent company of Snapchat) reached last-minute confidential settlements, leaving Meta and Google (YouTube) to face the jury alone. Legal experts say these settlements may indicate that some platforms are eager to avoid the reputational damage of a public trial, especially given the data on TikTok's mental health impact that plaintiffs were prepared to present.

If the jury finds Meta liable, the verdict could trigger a wave of social media regulation, forcing companies to fundamentally alter how their apps function. Possible changes include the end of autoplay features for minors, mandatory friction points that break engagement loops, and stricter age verification protocols. For now, the eyes of the tech world remain fixed on Los Angeles, waiting to see whether a jury will finally hold Silicon Valley accountable for the mental wellbeing of a generation.

Meta Child Safety Allegations: What Parents Need to Know

While the courtroom battle rages, parents are left wondering how to protect their families right now. The testimony highlighted that despite Meta's claims of robust safety tools, enforcement remains a challenge. Zuckerberg admitted on the stand that keeping under-13s off the platform is difficult because "people lie" about their age. The admission underscores that technical safeguards are often porous.

As the trial continues through February, further revelations about internal decision-making at Meta are expected. For families navigating the youth mental health crisis, this case serves as a stark reminder that the apps in our pockets are not neutral tools, but sophisticated systems designed to command our attention—at almost any cost.